Environment: 2 servers running CentOS 7.9, Kubernetes 1.27.4

192.168.87.130 master

192.168.87.129 node001

1. Prepare the environment: disable the firewall, disable SELinux, turn off swap, and set up NTP time synchronization
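The firewall and SELinux items have no commands of their own below. This is a minimal sketch of the usual CentOS 7 commands. The ones that change the live system are left as comments. The persistent SELinux change is wrapped in a function whose name, `disable_selinux_config`, is made up here, and it is demonstrated on a temporary file rather than the real /etc/selinux/config.

```bash
# Live-system commands (run as root on both hosts; not executed here):
#   systemctl stop firewalld && systemctl disable firewalld
#   setenforce 0        # switch SELinux to permissive until the next reboot

# Persist the change by rewriting the SELINUX= line of the config file.
disable_selinux_config() {
  local cfg=${1:-/etc/selinux/config}
  sed -ri 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$cfg"
}

# Demo on a throw-away copy.
demo=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
disable_selinux_config "$demo"
cat "$demo"
```

On a real host, run `disable_selinux_config` with no argument as root. `SELINUX=disabled` only takes full effect after a reboot, which is why `setenforce 0` is used for the current boot.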

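1.1 Turn off swap (by default kubelet refuses to start while swap is on)

swapoff -a # turn swap off now; lasts until the next reboot

sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent: comment out the swap entry in /etc/fstab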
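The sed above comments out every line that contains "swap", and each repeat run adds another `#`. This is a sketch of a narrower variant that can be re-run safely. It assumes a standard fstab where the third field is the filesystem type. The function name is hypothetical, and the demo runs on a temporary file.

```bash
# Comment out active swap entries in an fstab-style file.
# Only uncommented lines whose 3rd field (fs type) is "swap" are touched,
# so a second run does not produce "##".
comment_swap_entries() {
  local fstab=$1
  sed -ri '/^[[:space:]]*#/!{/^[^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]]/ s/^/#/;}' "$fstab"
}

# Demo on a throw-away file instead of the real /etc/fstab.
demo=$(mktemp)
cat > "$demo" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF
comment_swap_entries "$demo"
comment_swap_entries "$demo"   # second run changes nothing
cat "$demo"
```

On the real host, use `comment_swap_entries /etc/fstab`. After `swapoff -a`, the Swap row of `free -h` should show 0.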

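1.2 NTP time synchronization

yum install -y ntpdate

ntpdate ntp3.aliyun.com # sync once now, then add the cron entry below (crontab -e)

*/5 * * * * /usr/sbin/ntpdate ntp3.aliyun.com # every 5 minutes; full path because cron's default PATH lacks /usr/sbin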
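You can add the job without opening an editor by filtering the current crontab and writing it back. This also avoids duplicating the job when the setup is re-run. This is a sketch. `with_ntp_job` and `NTP_CRON_LINE` are illustrative names. It assumes ntpdate lives at /usr/sbin/ntpdate, as on CentOS 7. Discarding the job's output, so cron does not send mail, is a choice rather than a requirement.

```bash
# Desired cron entry; full path because cron's default PATH for user
# crontabs does not include /usr/sbin.
NTP_CRON_LINE='*/5 * * * * /usr/sbin/ntpdate ntp3.aliyun.com >/dev/null 2>&1'

# Read a crontab on stdin, drop any existing ntpdate lines, append ours.
with_ntp_job() {
  grep -v 'ntpdate' || true    # grep exits 1 when no lines remain; ignore that
  printf '%s\n' "$NTP_CRON_LINE"
}

# Real use, as root (not run here):
#   crontab -l 2>/dev/null | with_ntp_job | crontab -
printf '0 3 * * * /usr/bin/true\n*/5 * * * * ntpdate ntp3.aliyun.com\n' | with_ntp_job
```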

2. Append the following entries to /etc/hosts on both hosts

cat >> /etc/hosts <<EOF

192.168.87.130 kube-master #ip + hostname

192.168.87.129 kube-node001

EOF
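Appending with `cat >>` writes the lines again every time this step is repeated. This is a sketch of an idempotent alternative. `add_host` is a hypothetical helper, shown here against a temporary file.

```bash
# Append "IP NAME" to a hosts file only if NAME is not mapped there yet.
add_host() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # awk exits 0 if NAME appears as a hostname/alias on a non-comment line
  if ! awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demo on a throw-away file.
demo=$(mktemp)
printf '127.0.0.1 localhost\n' > "$demo"
add_host 192.168.87.130 kube-master "$demo"
add_host 192.168.87.129 kube-node001 "$demo"
add_host 192.168.87.130 kube-master "$demo"   # already present: no-op
cat "$demo"
```

On the real hosts, run `add_host 192.168.87.130 kube-master` and `add_host 192.168.87.129 kube-node001`. These names are assumed to be each machine's hostname, set with for example `hostnamectl set-hostname kube-master` on the master. By default kubeadm registers a node under its hostname.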

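3. Install the Docker engine. I wrote a script for this; run it on both hosts, passing the Docker registry mirror (accelerator) URL as its only argument.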

#!/bin/bash

if [ "$UID" -ne 0 ];then

echo -e "\033[33mWarn: must be root to run this script.\033[0m"

exit 1

fi

if [ $# -ne 1 ];then

echo -e "\033[33mWarn: usage: $0 <docker_registry_mirror_url>\033[0m"

exit 1

fi

DOCKER_HASTEN_URL=$1

function check_stage(){

local rc=$? stage_name=$1  # read $? first: any other command, local included, overwrites it

if [ "$rc" -eq 0 ];then

echo -e "\033[32m${stage_name} stage ---> exec success.\033[0m"

else

echo -e "\033[31m${stage_name} stage ---> exec fail.\033[0m"

exit 1

fi

}

function update_docker_repo01(){

echo -e "\033[32mLog ---> exec init docker yum repo and install docker-ce......\033[0m"

echo " "

yum install -y yum-utils device-mapper-persistent-data lvm2 wget >& /dev/null && \

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo >& /dev/null && \

sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo && \

yum makecache fast >& /dev/null && \

yum -y install docker-ce >& /dev/null || yum -y install docker-ce >& /dev/null || { echo -e "\033[31mError ---> 01 yum deployment failure due to configuration or network reasons.\033[0m"; return 1; }

}

function update_docker_repo02(){

echo " "

echo -e "\033[32mLog ---> exec init docker yum repo and install docker-ce......\033[0m"

sed -i "/keepcache=0/i\sslverify=0" /etc/yum.conf && yum clean all >& /dev/null && \

yum install -y yum-utils device-mapper-persistent-data lvm2 wget >& /dev/null && \

wget -O /etc/yum.repos.d/docker-ce.repo https://download.docker.com/linux/centos/docker-ce.repo --no-check-certificate >& /dev/null && \

sed -i 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo && \

yum makecache fast >& /dev/null && \

yum -y install docker-ce >& /dev/null || yum -y install docker-ce >& /dev/null || { echo -e "\033[31mError ---> 02 yum deployment failure due to configuration or network reasons.\033[0m"; return 1; }

}

function update_docker_repo(){

update_docker_repo01 || update_docker_repo02

}

function docker_start(){

echo -e "\033[32mLog ---> exec docker-ce start and enable......\033[0m"

echo " "

systemctl start docker && systemctl enable docker >& /dev/null

check_stage 'docker_start'

}

function config(){

local docker_hasten_url=$1

echo -e "\033[32mLog ---> exec init docker config......\033[0m"

echo " "

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["${docker_hasten_url}"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl daemon-reload && systemctl restart docker >& /dev/null

check_stage 'config'

}

function check_docker(){

if rpm -q docker-ce >& /dev/null;then

echo -e "\033[32mLog ---> docker install success!\033[0m"

else

echo -e "\033[31mError ---> docker install fail!\033[0m"

exit 1

fi

# systemctl is-active is a stable check; parsing line 3 of `systemctl status` is not

if systemctl is-active --quiet docker;then

echo " "

echo -e "\033[32mLog ---> docker start success!\033[0m"

else

echo -e "\033[31mError ---> docker start fail!\033[0m"

exit 1

fi

}

function main(){

update_docker_repo && sleep 1s

docker_start && sleep 1s

config "$DOCKER_HASTEN_URL"

check_docker

}

main
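The `check_stage` helper decides success from `$?`. That value is only meaningful if it is read before any other command runs, and a `local` assignment is itself a command that succeeds. Here is a small standalone demonstration; the function names are made up for it.

```bash
# `$?` holds the status of the most recently executed command.
status_after_local() {
  local name=$1              # this assignment resets $? to 0
  [ $? -eq 0 ] && echo "$name: success" || echo "$name: fail"
}

status_captured_first() {
  local rc=$? name=$1        # $? is expanded before `local` runs
  [ "$rc" -eq 0 ] && echo "$name: success" || echo "$name: fail"
}

# Each call runs right after a failing command.
false || status_after_local demo      # prints "demo: success" (wrong)
false || status_captured_first demo   # prints "demo: fail"
```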

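4. Install cri-dockerd (Kubernetes removed dockershim in v1.24, so kubelet reaches Docker through cri-dockerd)

Download page: https://github.com/Mirantis/cri-dockerd/releases/tag/v0.3.4

cd /opt && wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.4/cri-dockerd-0.3.4-3.el7.x86_64.rpm
# Install
rpm -ivh cri-dockerd-0.3.4-3.el7.x86_64.rpm
# Edit the systemd unit and change its ExecStart line to the one below
vi /usr/lib/systemd/system/cri-docker.service
ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7
# (the pause tag should ideally match the one listed by `kubeadm config images list --kubernetes-version v1.27.4`)
# Start, enable at boot, and check
systemctl daemon-reload && systemctl restart docker cri-docker
systemctl enable cri-docker
systemctl status docker cri-docker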
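Instead of editing the unit in vi, you can replace the ExecStart line with sed. This is a sketch. `set_cri_execstart` is an illustrative name, and the sample unit content in the demo is assumed rather than copied from the rpm.

```bash
# The ExecStart line from the step above.
CRI_EXEC='ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7'

# Replace the ExecStart= line(s) of a unit file with CRI_EXEC.
set_cri_execstart() {
  local unit=${1:-/usr/lib/systemd/system/cri-docker.service}
  sed -i "s|^ExecStart=.*|${CRI_EXEC}|" "$unit"
}

# Demo on a temporary file with assumed sample content.
demo=$(mktemp)
printf '[Service]\nType=notify\nExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd://\n' > "$demo"
set_cri_execstart "$demo"
grep '^ExecStart=' "$demo"
```

On the host, run `set_cri_execstart` with no argument and then `systemctl daemon-reload`. Package upgrades may replace files under /usr/lib/systemd/system, so the edit may need repeating after updating cri-dockerd.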

5. Prepare the system for Kubernetes (on both hosts)

cat <<EOF | tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF
modprobe overlay
modprobe br_netfilter
# Set the required sysctl parameters; they persist across reboots
cat <<EOF | tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
# Apply the sysctl parameters without rebooting
sysctl --system
# Check
lsmod | grep br_netfilter
lsmod | grep overlay
sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
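The lsmod and sysctl commands above print values for a person to read. This is a sketch of a check that fails loudly instead. `check_k8s_sysctls` is a hypothetical name. Its optional root-prefix argument exists only so it can be exercised against a fake directory tree.

```bash
# Report whether the three kernel parameters from step 5 are set to 1.
# Returns non-zero if any of them is not.
check_k8s_sysctls() {
  local root=${1:-} key path val ok=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    path="$root/proc/sys/${key//.//}"      # dots in the key become slashes
    val=$(cat "$path" 2>/dev/null || echo missing)
    if [ "$val" = 1 ]; then
      echo "OK   $key = 1"
    else
      echo "FAIL $key = $val"
      ok=1
    fi
  done
  return "$ok"
}

# Demo against a fake /proc/sys tree.
fake=$(mktemp -d)
mkdir -p "$fake/proc/sys/net/bridge" "$fake/proc/sys/net/ipv4"
echo 1 > "$fake/proc/sys/net/bridge/bridge-nf-call-iptables"
echo 1 > "$fake/proc/sys/net/bridge/bridge-nf-call-ip6tables"
echo 0 > "$fake/proc/sys/net/ipv4/ip_forward"
check_k8s_sysctls "$fake" || echo "some parameters are not set"
```

On a host, `check_k8s_sysctls` with no argument reads the real /proc/sys. If the two bridge keys show "missing", br_netfilter is usually not loaded.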

6. Install the Kubernetes packages (kubeadm, kubelet, kubectl); run this script on both hosts

#!/bin/bash

function k8s_repo_update(){

cat > /etc/yum.repos.d/k8s.repo << EOF

[kubernetes]

name=kubernetes

enabled=1

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

EOF

cd /etc/yum.repos.d/ && wget https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg --no-check-certificate && \

rpm --import rpm-package-key.gpg && yum repolist

}

function install_start(){

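yum -y install kubeadm-1.27.4 kubectl-1.27.4 kubelet-1.27.4 && \

systemctl enable kubelet.service && \

echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

# kubelet now restarts every few seconds until kubeadm init/join configures it; that is expected.
# `systemctl status kubelet` exits non-zero while kubelet is not running, so it is kept out of the && chain.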

}

main(){

k8s_repo_update && install_start

}

main

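7. Initialize the cluster (on the master)

kubeadm init \
  --apiserver-advertise-address=192.168.87.130 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.27.4 \
  --service-cidr=10.100.0.0/12 \
  --pod-network-cidr=172.10.0.0/16 \
  --cri-socket unix:///var/run/cri-dockerd.sock

Note on the two ranges: 10.100.0.0/12 has host bits set, so it is effectively treated as 10.96.0.0/12 (10.96.0.0 to 10.111.255.255). 172.10.0.0/16 lies outside the RFC 1918 private block 172.16.0.0/12. That works in an isolated lab, but a private range avoids clashing with real public addresses. Whatever you pass as --pod-network-cidr must equal "Network" in the flannel config of step 10.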
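You can check the two ranges before running `kubeadm init` with a little integer arithmetic. This is a sketch of pure-bash IPv4 helpers. All function names are illustrative, and octets with leading zeros are not handled.

```bash
ip_to_int() {                      # 10.96.0.1 -> 174063617
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

mask_of() {                        # prefix length -> 32-bit netmask as integer
  echo $(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}

# Print the real network address of a CIDR (host bits cleared).
cidr_network() {
  local ip=${1%/*} len=${1#*/}
  echo "$(int_to_ip $(( $(ip_to_int "$ip") & $(mask_of "$len") )))/$len"
}

# Succeed if two CIDRs share any address: they overlap exactly when they
# agree on the bits of the shorter prefix.
cidrs_overlap() {
  local a=${1%/*} la=${1#*/} b=${2%/*} lb=${2#*/}
  local len=$(( la < lb ? la : lb ))
  local m; m=$(mask_of "$len")
  (( ($(ip_to_int "$a") & m) == ($(ip_to_int "$b") & m) ))
}

cidr_network 10.100.0.0/12        # prints 10.96.0.0/12
cidr_network 172.10.0.0/16        # prints 172.10.0.0/16
if cidrs_overlap 10.100.0.0/12 172.10.0.0/16; then
  echo "service and pod CIDRs overlap"
else
  echo "no overlap"               # this branch runs
fi
cidrs_overlap 172.10.0.0/16 172.16.0.0/12 || echo "172.10.0.0/16 is outside 172.16.0.0/12"
```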

8. After initialization (on the master)

# For a non-root user:
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
# For root, either (current shell only, lost after logout or reboot; not recommended):
export KUBECONFIG=/etc/kubernetes/admin.conf
# or permanently (no need to repeat after kubeadm reset and a new init):
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
source ~/.bash_profile   # load it into the current shell
echo $KUBECONFIG         # check that the setting is in effect

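9. Join the node to the cluster (run on the node)

# The join command printed at the end of the master's kubeadm init output (token and hash below are examples; use your own)
kubeadm join 192.168.87.130:6443 --token i5bm4t.r5nezgh84j738rgh \
  --discovery-token-ca-cert-hash sha256:2b95805195915a9ef75c84e91cf8e5fbbfbed2a1b60e1c7bd4773c6727d9bedf \
  --cri-socket unix:///var/run/cri-dockerd.sock

PS: a token is normally valid for 24 hours. If it has expired, run kubeadm token create --print-join-command on the master to print a fresh join command, then run that on the node, adding --cri-socket unix:///var/run/cri-dockerd.sock.

# Check whether the cluster came up (on the master):
kubectl get nodes
kubectl get cs   # componentstatuses: deprecated since v1.19 but still answers
# The control-plane static Pod manifests are in /etc/kubernetes/manifests/

PS: if every node shows NotReady, that is normal, because no network plugin has been deployed yet.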
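If the hash from the init output is lost, `kubeadm token create --print-join-command` prints it again. You can also recompute it from the CA certificate with the openssl pipeline given in the kubeadm join documentation. Below it is wrapped in a function with an illustrative name, assuming the default RSA CA key that kubeadm generates.

```bash
# Print the --discovery-token-ca-cert-hash value for a CA certificate:
# SHA-256 of the DER-encoded public key, prefixed with "sha256:".
ca_cert_hash() {
  local cert=${1:-/etc/kubernetes/pki/ca.crt}
  local hex
  hex=$(openssl x509 -pubkey -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')          # keep only the hex digest
  echo "sha256:$hex"
}

# On the master:
#   ca_cert_hash
```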

10. Deploy the flannel network add-on

Save the manifest below as kube-flannel.yaml on the master, then apply it:

kubectl apply -f kube-flannel.yaml

apiVersion: v1
kind: Namespace
metadata:
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
  name: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "172.10.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
kind: ConfigMap
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-cfg
  namespace: kube-flannel
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-ds
  namespace: kube-flannel
spec:
  selector:
    matchLabels:
      app: flannel
      k8s-app: flannel
  template:
    metadata:
      labels:
        app: flannel
        k8s-app: flannel
        tier: node
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      containers:
      - args:
        - --ip-masq
        - --kube-subnet-mgr
        command:
        - /opt/bin/flanneld
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        image: docker.io/flannel/flannel:v0.22.0
        name: kube-flannel
        resources:
          requests:
            cpu: 100m
            memory: 50Mi
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
          privileged: false
        volumeMounts:
        - mountPath: /run/flannel
          name: run
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
        - mountPath: /run/xtables.lock
          name: xtables-lock
      hostNetwork: true
      initContainers:
      - args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        command:
        - cp
        image: docker.io/flannel/flannel-cni-plugin:v1.1.2
        name: install-cni-plugin
        volumeMounts:
        - mountPath: /opt/cni/bin
          name: cni-plugin
      - args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        command:
        - cp
        image: docker.io/flannel/flannel:v0.22.0
        name: install-cni
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
      priorityClassName: system-node-critical
      serviceAccountName: flannel
      tolerations:
      - effect: NoSchedule
        operator: Exists
      volumes:
      - hostPath:
          path: /run/flannel
        name: run
      - hostPath:
          path: /opt/cni/bin
        name: cni-plugin
      - hostPath:
          path: /etc/cni/net.d
        name: cni
      - configMap:
          name: kube-flannel-cfg
        name: flannel-cfg
      - hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
        name: xtables-lock

Note: net-conf.json is parsed as JSON, so it cannot carry # comments. "Network" is the Pod CIDR and must equal the --pod-network-cidr given to kubeadm init (172.10.0.0/16 here). "Backend.Type" selects the backend mode (vxlan here).
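A mismatch between flannel's Network and kubeadm's Pod CIDR is easy to miss, so a small check can compare them before the manifest is applied. This is a sketch with hypothetical helper names. It pulls the first "Network" line out with sed rather than parsing JSON, so it assumes the layout used in the manifest above.

```bash
# Print the "Network" value found in a flannel manifest.
flannel_network() {
  sed -n 's/^[[:space:]]*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Compare it with the --pod-network-cidr given to kubeadm init.
check_flannel_cidr() {
  local manifest=$1 pod_cidr=$2 net
  net=$(flannel_network "$manifest")
  if [ "$net" = "$pod_cidr" ]; then
    echo "OK: flannel Network $net matches --pod-network-cidr"
  else
    echo "MISMATCH: flannel Network '$net' vs --pod-network-cidr '$pod_cidr'"
    return 1
  fi
}

# Demo on an excerpt of the ConfigMap.
demo=$(mktemp)
cat > "$demo" <<'EOF'
  net-conf.json: |
    {
      "Network": "172.10.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
EOF
check_flannel_cidr "$demo" 172.10.0.0/16
```

Run `check_flannel_cidr kube-flannel.yaml 172.10.0.0/16` before `kubectl apply`. On a running cluster, `kubectl -n kube-system get cm kubeadm-config -o yaml | grep podSubnet` shows the value kubeadm recorded.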

11. Test the cluster

kubectl get nodes
kubectl create deployment nginx --image=nginx:1.7.9   # create a Deployment
kubectl expose deployment nginx --port=80 --type=NodePort   # create a NodePort Service
kubectl get pods,svc   # show the nginx Pods and Service
# Then curl <node IP>:<NodePort of the nginx Service> to reach nginx.
# Test etcd (metrics endpoint, using the etcd healthcheck client certificate):
curl -k --cert /etc/kubernetes/pki/etcd/healthcheck-client.crt --key /etc/kubernetes/pki/etcd/healthcheck-client.key https://192.168.87.130:2379/metrics

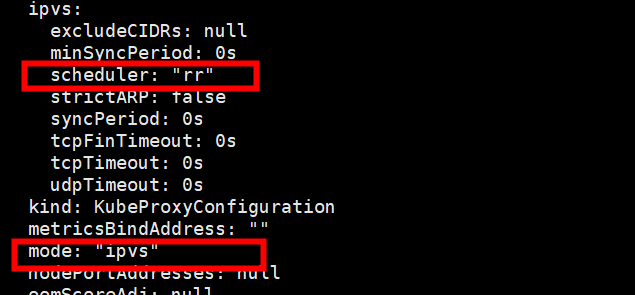

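12. Switch kube-proxy to IPVS mode

kubectl edit cm kube-proxy -n kube-system   # in config.conf, change mode: "" to mode: "ipvs" (empty means iptables)

Restart kube-proxy by deleting its Pod; the DaemonSet recreates it with the new config. kube-proxy-z67fm is the Pod name in my cluster, so yours will differ. Delete one Pod per node, or restart them all with kubectl -n kube-system rollout restart daemonset kube-proxy.

kubectl delete pod kube-proxy-z67fm -n kube-system

Check the IPVS rules:

yum -y install ipvsadm && ipvsadm -Ln

Why IPVS instead of iptables: IPVS redirects traffic faster and performs better when syncing proxy rules, especially as the number of Services grows. It also offers more load-balancing algorithms, for example rr (round-robin), lc (least connections), dh (destination hashing), sh (source hashing), sed (shortest expected delay), and nq (never queue).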
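`kubectl edit` works, but you can also script the same change. This is a sketch with a hypothetical `set_ipvs_mode` filter, assuming the kubeadm-generated config.conf contains `mode: ""`. IPVS mode also needs the ip_vs kernel modules on every node. The module list below is a common choice and is an assumption; nf_conntrack_ipv4 is the name of the conntrack module on CentOS 7's 3.10 kernel.

```bash
# Filter for the kube-proxy ConfigMap YAML: change `mode: ""` (empty means
# iptables) to `mode: "ipvs"`, keeping the original indentation.
set_ipvs_mode() {
  sed 's/^\([[:space:]]*\)mode: ""/\1mode: "ipvs"/'
}

# Kernel modules commonly loaded for IPVS mode (assumed list; newer kernels
# use nf_conntrack instead of nf_conntrack_ipv4).
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"

# Real use (not run here):
#   on every node, as root:
#     for m in $IPVS_MODULES; do modprobe "$m"; done
#     printf '%s\n' $IPVS_MODULES > /etc/modules-load.d/ipvs.conf
#   on the master:
#     kubectl -n kube-system get cm kube-proxy -o yaml | set_ipvs_mode | kubectl apply -f -
#     kubectl -n kube-system rollout restart daemonset kube-proxy

printf '    kind: KubeProxyConfiguration\n    mode: ""\n' | set_ipvs_mode
```

The anchored pattern leaves keys such as `detectLocalMode: ""` untouched, because only a line whose key is exactly `mode` matches.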

13. Using kubectl on the node as well

# On the master: copy admin.conf to the node
scp /etc/kubernetes/admin.conf 192.168.87.129:/etc/kubernetes/
# On the node: check that admin.conf arrived
cd /etc/kubernetes && ls admin.conf
# Add it to the environment permanently
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
source ~/.bash_profile
# Use kubectl on the node
kubectl get pod